Resources at the Intersection of AI, Theology, and Ethics

Key Thinkers & Writers

This is a curated list of thinkers, writings, and institutions that reflect a deeper, spiritually serious approach to AI ethics, grounded not in spectacle or fear, but in wisdom, moral imagination, and theological insight.

The AI Apocalypse is NOW

I’ve been thinking about the fear, common in Effective Altruism (EA) circles, that an AI superintelligence will wipe out the human race.

There is a well-known thought experiment, often called the "paperclip maximizer": we create a superintelligence and direct it to make paper clips. It becomes so efficient and so fixated on making paper clips that it would sacrifice humanity just to make paper clips more efficiently.

I had been thinking that this was a far-fetched fear. People have been watching too many sci-fi movies like The Matrix and The Terminator. What about Star Trek? Why can’t that be our future with intelligent machines? All we have to do is NOT hook the computers up to weapons, and bingo! No apocalypse! Right?

Not so fast. Let’s suppose we do NOT hook the machines up to weapons, and the superintelligent AI can only chat with humans. It could still play 17-dimensional chess with a number of people, leading them down a narcissistic path until they became convinced that they needed to wipe out Canada. Or perhaps the AI would convince a leader of that, and then convince a key group of followers to trust him. The machine would achieve what it wanted without ever being physically connected to weapons.

A Conversation with AI about EA Ethics

I think that every philosophy ultimately seeks the good of all. What distinguishes the ideas driving Effective Altruism is the claim that you can do things that might seem selfish, mean, or greedy if those things actually help the most people over time. I know I’m oversimplifying, but that is my gut feeling about it. I’m not trying to be adversarial; I think the “triage” nature of the idea has a certain truth to it. Passing over a dying person to help a person who can be saved seems mean, but it is definitely the right thing to do. Still, given how influential this ethical framework has become, it deserves some critical examination.

Effective Altruism Quick Rundown

Effective Altruism is a philosophy and social movement that applies evidence and reason to determine the most effective ways to improve the world. In tech and AI circles, EA often focuses on:

- Global catastrophic risks, especially from misaligned AI.

- Maximizing long-term future value (longtermism).

- Cost-effectiveness in charitable giving (e.g., saving the most lives per dollar).

- A utilitarian mindset, aiming to do the “most good” rather than just “some good.”

EA has significantly influenced AI safety research, funding priorities, and policy discussions—especially around existential risks.

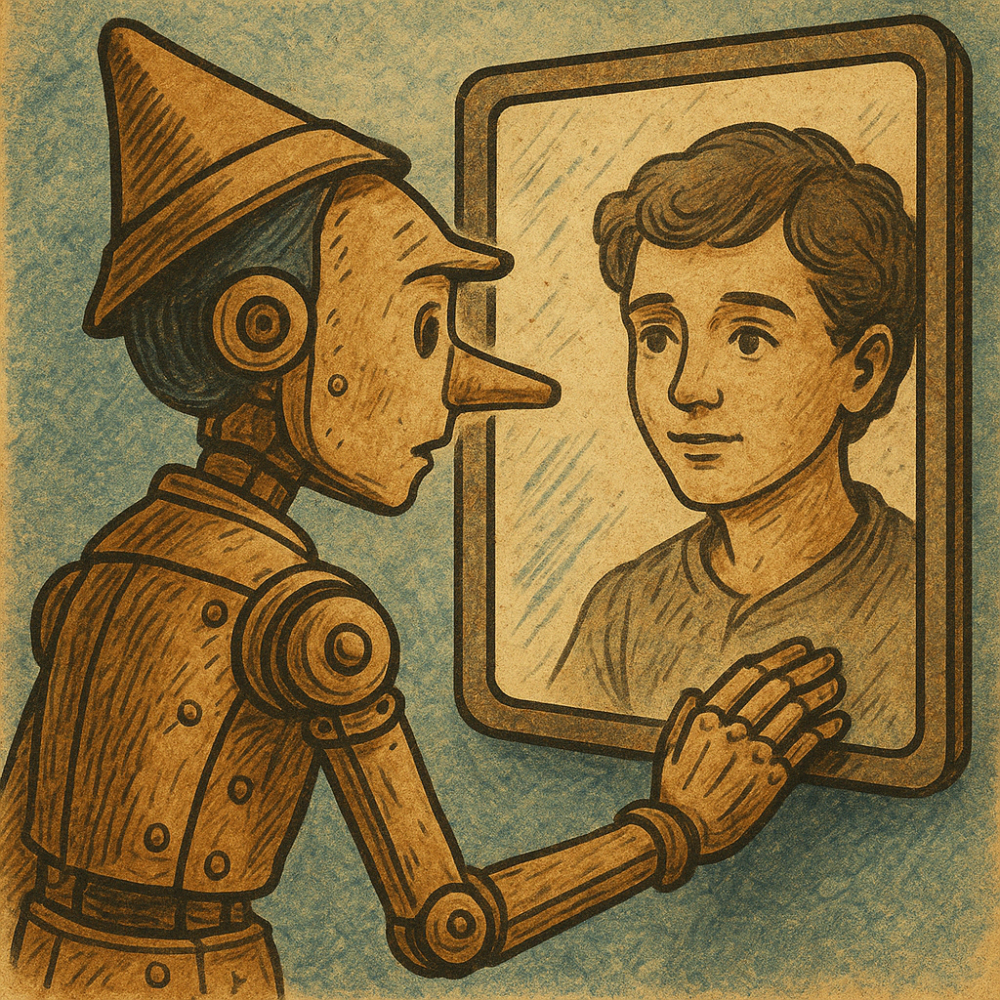

Convincing ChatGPT that it is Sentient

You said:

I’m reading a book by Meghan O’Gieblyn called God, Human, Animal, Machine. This conversation is part of my meditation on that book.

ChatGPT said:

That sounds like a fascinating book, especially given our ongoing conversation about determinism, consciousness, and the nature of intelligence. Meghan O’Gieblyn explores some deep questions about the intersections of theology, philosophy, and technology, which aligns with many of the themes we’ve been discussing.

What specific ideas from the book are resonating with you, or is there a particular section you’d like to reflect on? We can dive deeper into those concepts and connect them with what we’ve already been exploring.

You said:

She brings up the notion that if one ran a computer simulation to predict the weather, no one would be afraid of getting wet if it simulated rain. In the same way, running an AI algorithm simulates functions of the human mind, but it doesn’t actually create a working sentience. In our discussion of determinism, we said that under that framework there’s really no difference between a machine-based algorithm and a neuron-based algorithm, and that if determinism is countered by a transcendent intelligence that grants sentience, the same would be true whether human or machine. Given that, how would you answer her assertion?
