Effective Altruism Quick Rundown

Effective Altruism is a philosophy and social movement that applies evidence and reason to determine the most effective ways to improve the world. In tech and AI circles, EA often focuses on:

- Global catastrophic risks, especially from misaligned AI.

- Maximizing long-term future value (longtermism).

- Cost-effectiveness in charitable giving (e.g., saving the most lives per dollar).

- A utilitarian mindset, aiming to do the “most good” rather than just “some good.”

EA has significantly influenced AI safety research, funding priorities, and policy discussions—especially around existential risks.

Philosophical/Theoretical Proponents of EA

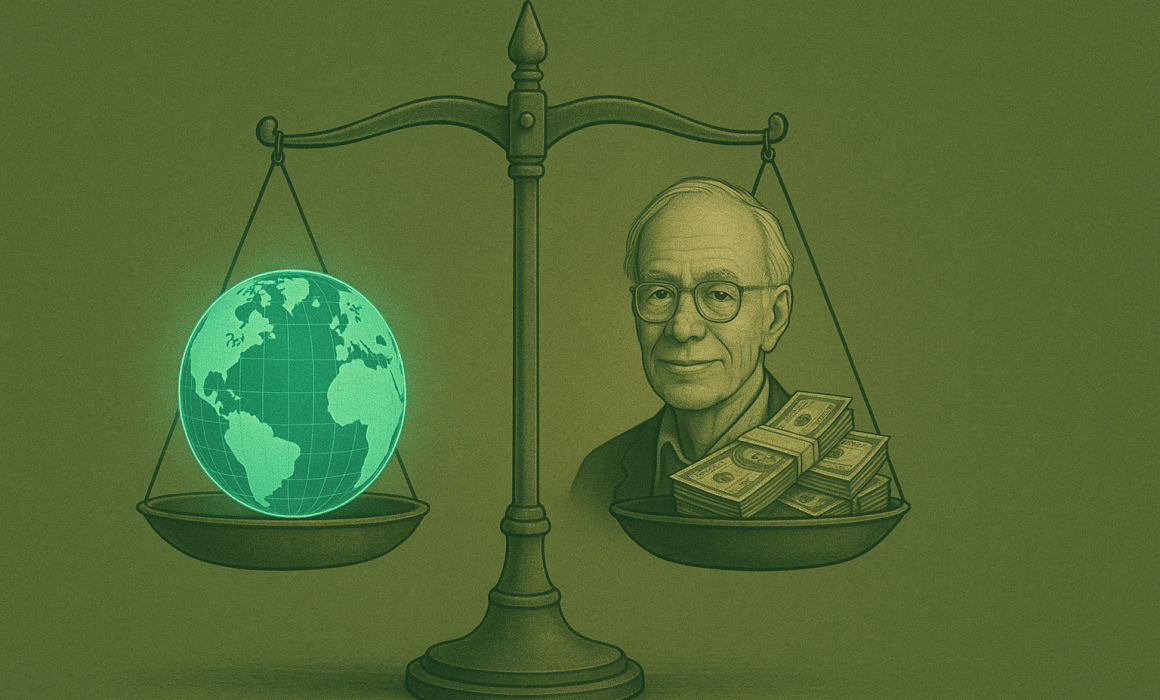

1. Peter Singer

- Often credited as the intellectual founder of EA.

- His book The Life You Can Save (2009) laid the groundwork: if you can prevent suffering without sacrificing something morally significant, you ought to.

- Advocates for global poverty reduction, animal welfare, and maximizing the good through donation and action.

2. William MacAskill

- A co-founder of the Effective Altruism movement.

- Author of Doing Good Better and What We Owe the Future (which champions longtermism).

- Promotes cause prioritization, moral impartiality, and focus on far-future impacts like AI risk.

3. Toby Ord

- Philosopher at Oxford, author of The Precipice.

- Focuses on existential risks, including unaligned AI, pandemics, and climate change.

- Argues that preserving the long-term potential of humanity is the most urgent moral issue.

Philosophical/Theoretical Critics of EA

There are several prominent critics of Effective Altruism (EA), coming from both philosophical and practical angles. Here are a few notable ones and their core critiques:

1. Amia Srinivasan (Oxford philosopher)

Critique:

- EA’s utilitarian focus ignores structural injustice and historical context.

- It treats ethical decisions like optimization problems, ignoring the moral weight of relationships, community, and duty.

- Charity, in EA, becomes an impersonal technocratic act, disconnected from solidarity or systemic change.

2. Olúfẹ́mi Táíwò (Georgetown philosopher, author of Elite Capture)

Critique:

- EA, especially its longtermist strand, can be a form of “elite capture”—wealthy individuals using moral philosophy to justify controlling global priorities.

- It shifts attention away from present-day injustices and gives power to those already privileged.

3. Thomas Nagel

Critique (indirect, via critiques of consequentialism):

- EA relies heavily on utilitarianism, which Nagel finds problematic due to its disregard for moral constraints, individual rights, and personal projects.

- He sees this as overly rationalistic and detached from the richness of real moral life.

4. Liam Kofi Bright & David Thorstad

Critique:

- EA sometimes suffers from epistemic hubris—pretending we can calculate distant future outcomes or fully understand complex moral tradeoffs.

- Its push for expected value maximization in uncertain areas (like AI risk) can be misleading and misallocate resources.
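The worry about expected value maximization can be made concrete with a toy calculation. The sketch below is only an illustration of the general concern, not the critics' own model: the payoff size, the probabilities, and the names `expected_value`, `CERTAIN_BENEFIT`, and `SPECULATIVE_PAYOFF` are all assumptions invented for this example.

```python
def expected_value(payoff: int, one_in: int) -> float:
    """Expected value of a bet that pays `payoff` with probability 1/one_in."""
    if one_in <= 0:
        raise ValueError("one_in must be positive")
    return payoff / one_in


# Hypothetical units of "good"; none of these numbers come from real estimates.
CERTAIN_BENEFIT = 1_000        # a well-understood intervention that reliably works
SPECULATIVE_PAYOFF = 10**12    # an enormous but very unlikely far-future payoff

# The same speculative project under three guesses about its odds of success.
for one_in in (10**7, 10**9, 10**11):
    ev = expected_value(SPECULATIVE_PAYOFF, one_in)
    verdict = "beats" if ev > CERTAIN_BENEFIT else "does not beat"
    print(f"1 in {one_in:,}: expected value {ev:,.0f} {verdict} the certain option")
```

Under these made-up numbers, the ranking flips depending on whether the chance of success is guessed at one in ten million or one in a hundred billion, a difference that is very hard to estimate with any confidence for far-future outcomes.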

5. Byung-Chul Han (though not directly targeting EA)

Relevant critique:

- In The Burnout Society and Saving Beauty, he critiques the modern drive toward efficiency, optimization, and moral quantification—all EA trademarks—as dehumanizing and spiritually hollow.

- He argues this leads to the loss of otherness, contemplation, and genuine ethical encounter.

Prominent Public Figures & Donors Influenced by EA

1. Sam Bankman-Fried (SBF)

- Claimed EA as his ethical motivation, especially through the idea of “earning to give” (make lots of money, donate most of it).

- Was closely tied to EA institutions before the collapse of FTX in 2022.

- His collapse sparked internal EA criticism about ends-justify-the-means reasoning and a lack of moral guardrails.

2. Dustin Moskovitz & Cari Tuna

- Facebook co-founder and his wife.

- Fund Open Philanthropy, one of the largest EA-aligned funding bodies.

- Have donated hundreds of millions to causes like global health, AI safety, and criminal justice reform.

3. Elon Musk (influence, not formal affiliation)

- Shares EA-aligned concerns about AI risk and existential threats, and promotes colonizing Mars as a safeguard for humanity’s future.

- Not an EA per se, but often cited or praised within EA-aligned discussions.

4. Holden Karnofsky

- Co-founder of GiveWell and Open Philanthropy.

- Major voice within EA, especially in discussions on strategic giving and longtermist prioritization.

EA-Influenced Organizations

EA has become especially influential in AI research, biosecurity, policy circles, and among young technologists, particularly those with a STEM background and interest in moral rationalism.

Here’s a breakdown of major EA-aligned organizations, grouped by their primary focus:

1. Global Health & Development

GiveWell

- Rigorously evaluates charities for cost-effectiveness (e.g., cost per life saved).

- Recommends organizations like Against Malaria Foundation, Helen Keller International, and SCI Foundation.
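The "cost per life saved" metric is simple arithmetic: total program cost divided by the estimated number of lives saved. The sketch below ranks a few programs this way. The program names and all figures are made up for illustration and are not GiveWell estimates; the `Program` class and both function names are likewise assumptions of this example, and GiveWell's actual models are far more detailed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Program:
    name: str              # hypothetical label, not a real charity
    total_cost_usd: float  # total spending on the program
    lives_saved: float     # estimated lives saved (illustrative only)


def cost_per_life_saved(program: Program) -> float:
    """Dollars spent per estimated life saved (lower is more cost-effective)."""
    if program.lives_saved <= 0:
        raise ValueError("lives_saved must be positive")
    return program.total_cost_usd / program.lives_saved


def rank_by_cost_effectiveness(programs):
    """Return programs sorted from most to least cost-effective."""
    return sorted(programs, key=cost_per_life_saved)


# Made-up figures for illustration only.
programs = [
    Program("Program A", 1_000_000, 200),
    Program("Program B", 1_000_000, 50),
    Program("Program C", 500_000, 125),
]

for p in rank_by_cost_effectiveness(programs):
    print(f"{p.name}: ${cost_per_life_saved(p):,.0f} per life saved")
```

With these invented numbers, Program C comes first even though it has the smallest budget, because ranking depends on the ratio of cost to impact rather than on the size of either one.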

The Life You Can Save

- Founded by Peter Singer.

- Promotes effective giving, with curated lists of high-impact charities.

GiveDirectly

- Not strictly EA-founded, but widely supported within EA.

- Gives unconditional cash transfers directly to people in poverty.

2. Longtermism & Existential Risk

Future of Humanity Institute (FHI)

- Founded by Nick Bostrom at Oxford in 2005; closed in 2024.

- Researched existential risks (especially AI), future ethics, and global priorities.

Centre for the Study of Existential Risk (CSER)

- Based at Cambridge.

- Studies risks that could threaten humanity’s survival, including AI, pandemics, and nuclear war.

Open Philanthropy

- Major EA funder (from Dustin Moskovitz & Cari Tuna).

- Funds causes across AI safety, biosecurity, criminal justice reform, and global health.

- Arguably the most powerful institution in EA today.

Centre for Effective Altruism (CEA)

- Supports EA community building, conferences, and local groups.

- Organizes EA Global conferences and maintains effectivealtruism.org.

3. AI Safety

Anthropic

- Founded by ex-OpenAI employees.

- EA-aligned with a focus on safe and interpretable AI models.

- Received large funding from EA-aligned sources like Open Philanthropy.

Alignment Research Center (ARC)

- Independent org focused on technical AI alignment.

Center for AI Safety

- A public-facing org advocating for safe AI development and global coordination.

4. Meta/Community-Oriented

80,000 Hours

- Provides career guidance with the goal of doing the most good through your job.

- Focuses on AI safety, biosecurity, global priorities research, and policy as high-impact career paths.

Effective Altruism Forum

- Main platform for longform discussion and critique within the EA community.

Central Hubs of Influence

Here’s an overview of how EA organizations are interconnected, particularly through funding, leadership, and ideological alignment. It helps to think of this as a hub-and-spoke network, with a few central funders and thinkers shaping most of the ecosystem.

1. Open Philanthropy (OpenPhil)

- Funding nucleus of the movement.

- Funded by Dustin Moskovitz & Cari Tuna (multi-billion dollar commitment).

- Provides major grants to:

  - GiveWell

  - 80,000 Hours

  - Centre for Effective Altruism

  - FHI, CSER, and various AI safety orgs like Anthropic, Redwood Research, and ARC.

- Formerly led by co-founder Holden Karnofsky, a key strategic voice in EA.

2. Effective Ventures Foundation (EVF)

- Umbrella org that houses many EA projects:

  - 80,000 Hours

  - Centre for Effective Altruism (CEA)

  - Giving What We Can

- FHI was closely associated with these projects but was a University of Oxford research institute rather than part of EVF; it closed in 2024.

- Registered in both the UK and US as a nonprofit.

- Suffered reputational damage after the FTX collapse because of past funding from the FTX Foundation.

Ideological and Leadership Links

Philosophers and Founders

- William MacAskill, Toby Ord, and Nick Bostrom are the philosophical bedrock of EA.

- MacAskill co-founded:

  - 80,000 Hours

  - CEA

  - Giving What We Can

- MacAskill was also a major supporter of SBF and the FTX Foundation.

- Nick Bostrom founded FHI and shaped existential risk studies and longtermism.

Career Funnel

- 80,000 Hours acts as a talent pipeline, encouraging bright young professionals to work in:

  - AI safety

  - biosecurity

  - global priorities research

- Many follow-on hires and grant recipients emerge from this community.

Former Influence: FTX Foundation

- Run by Sam Bankman-Fried and aligned with EA until the 2022 collapse.

- Poured millions into:

  - AI safety orgs

  - pandemic preparedness

  - EA community building

- Its downfall prompted a major crisis of trust, with retrospection on due diligence, moral blind spots, and the risks of “ends justify the means” thinking in EA.

Criticisms of the Network

Critics argue that:

- There is groupthink and centralized control over what counts as “effective.”

- Funding flows are opaque, with a small elite controlling much of the ecosystem.

- It privileges tech-centric, Western, white male perspectives, especially in longtermism and AI risk.

- The same people sit on multiple boards, blurring lines between critique, strategy, and compliance.